Why is Google Translate So Bad?

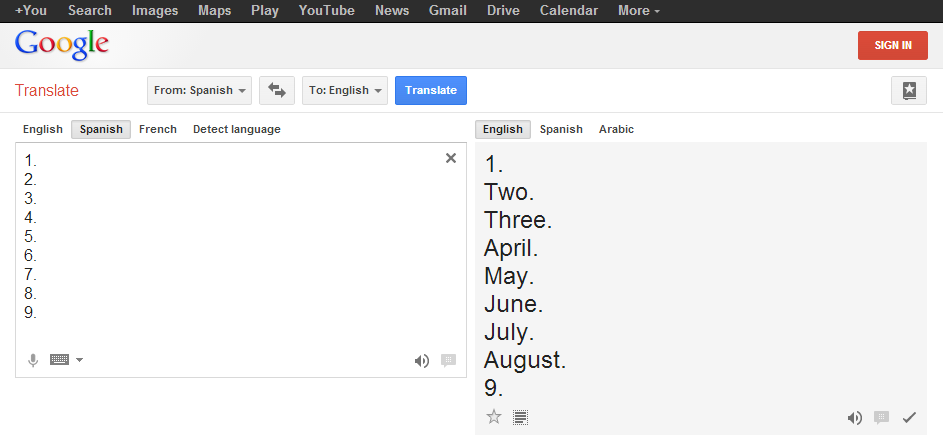

I saw this post on Reddit this afternoon and it reminded me how terrible Google Translate generally is. Here's the image:

Someone in the comments also linked to Translation Party, which translates between English and Japanese until the same thing comes out twice. It shows a lot of interesting problems with the model that Google uses to do translations. This one came off of a bottle of Rain-X I had lying around.
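If you're curious what that back-and-forth loop looks like, here's a rough sketch. I don't know how Translation Party is actually implemented, so the function name `find_equilibrium` and the two lookup tables are my own stand-ins; the table entries are placeholder strings, not real translations.

```python
# Made-up lookup tables standing in for a real translation service.
# The strings are placeholders, not real translations.
FAKE_EN_TO_JA = {
    "english v1": "japanese v1",
    "english v2": "japanese v2",
    "english v3": "japanese v3",
}
FAKE_JA_TO_EN = {
    "japanese v1": "english v2",  # the wording drifts on the way back
    "japanese v2": "english v3",
    "japanese v3": "english v3",  # stable: the round trip returns its input
}


def find_equilibrium(text, to_japanese, to_english, max_rounds=20):
    """Round-trip text until two consecutive English results match."""
    history = [text]
    for _ in range(max_rounds):
        text = to_english(to_japanese(text))
        history.append(text)
        if history[-1] == history[-2]:
            break  # the same thing came out twice
    return history


history = find_equilibrium(
    "english v1", FAKE_EN_TO_JA.__getitem__, FAKE_JA_TO_EN.__getitem__
)
print(history)
```

The `max_rounds` cap is there because nothing guarantees that a real translator ever settles down.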

So why is Google Translate so bad in these cases? Well, it's all because of the math behind the system. Google uses a method called statistical machine translation (SMT), where a computer system develops a mathematical model of language based on statistical relationships between human-translated documents. Google pulls a lot from UN documents and other human-translated documents on the internet, like Wikipedia articles. This technique works pretty well in most situations and can be indistinguishable from a human translation for things like set phrases (e.g. "Thank you." or "You're welcome.") or commonly translated phrases like place names or dates.

The problem with SMT is that because the model has no knowledge about the syntax or semantics of natural languages, it can't make reasonable decisions about meaning when there are multiple ambiguous options. This also means that the model has no internal representation of meaning and so must translate directly between languages using the statistical correspondences it calculates from its inputs.

For example, if you want to translate something from English to French using a human translator who is a native French speaker, that person will read the English text and fully understand it before writing a new document with the same meaning in French. What Google Translate does, on the other hand, is look at all of the information it's collected about the statistical relationships between the English words it recognizes and the French words in its model and then construct French output based on these relationships. Note that Google Translate does not take into account the structure or meaning of the English input or the French output; it simply chooses French words and their order based on the likelihood of their occurrence given the English input. This is not so great.
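To make the "statistical relationships" idea concrete, here's a toy version. This is a minimal sketch of IBM Model 1, a classic textbook word-alignment model, and not Google's actual system. The three-sentence corpus and the names `train_ibm1` and `translate` are made up for illustration. The model never learns what a word means; it only learns which French words tend to show up alongside which English words.

```python
from collections import defaultdict

# Tiny made-up parallel corpus: (English words, French words).
CORPUS = [
    ("the house".split(), "la maison".split()),
    ("the flower".split(), "la fleur".split()),
    ("a flower".split(), "une fleur".split()),
]


def train_ibm1(pairs, iterations=20):
    """Estimate t[(f, e)] = P(French word f | English word e) with EM."""
    src_vocab = {e for src, _ in pairs for e in src}
    tgt_vocab = {f for _, tgt in pairs for f in tgt}
    # Start with no preference at all: every pairing is equally likely.
    t = {(f, e): 1.0 / len(tgt_vocab) for f in tgt_vocab for e in src_vocab}
    for _ in range(iterations):
        count = defaultdict(float)
        total = defaultdict(float)
        # E-step: share each French word among the English words in its sentence.
        for src, tgt in pairs:
            for f in tgt:
                z = sum(t[(f, e)] for e in src)
                for e in src:
                    c = t[(f, e)] / z
                    count[(f, e)] += c
                    total[e] += c
        # M-step: renormalize the shared counts into probabilities.
        t = {(f, e): count[(f, e)] / total[e] for f in tgt_vocab for e in src_vocab}
    return t


def translate(sentence, t):
    """Swap each English word for its most probable French word, in place."""
    tgt_vocab = sorted({f for f, _ in t})
    known = {e for _, e in t}
    out = []
    for e in sentence.split():
        if e not in known:
            out.append(e)  # pass unknown words through unchanged
        else:
            out.append(max(tgt_vocab, key=lambda f: t[(f, e)]))
    return " ".join(out)


table = train_ibm1(CORPUS)
print(translate("the flower", table))
print(translate("a house", table))
```

Note that `translate` keeps the English word order, which is exactly the kind of shortcut that falls apart once the two languages order their words differently.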

This may not seem like a huge problem, and it generally isn't if you're translating between similar languages like English and German or Italian and Spanish — although there are other problems here. Things really get noticeably bad when you try to translate between languages that have very different syntax (like English and Japanese) because words with corresponding meanings may be in completely different places in the sentence, making it difficult to build a useful model even with a large amount of input.

For example, the English sentence "How much is that red umbrella?" could be translated to Japanese as "Ano akai kasa wa ikura desu ka?" You can see that the English words "red umbrella" are pretty far away from their Japanese equivalent, "akai kasa." You can also see that the English phrase "How much is" has moved to the end of the sentence ("ikura desu ka"). The software has to be able to figure out these relationships in order to produce good translations, and it's a lot harder to do that when the words aren't where you expect them to be. In fact, Google gets this example half right: going from English, it produces the Japanese sentence "Sono akai kasa wa ikura desu ka" ("sono" is OK depending on context), but it thinks the correct English translation of that Japanese sentence is "That red umbrella How much?"

That's why Translation Party is so interesting; Google Translate knows relatively little about the relationship between English and Japanese, so the structure of the output is totally nonsensical most of the time. There are other more interesting examples that really show what kind of data Google uses to train its model.

A good example is "Lorem ipsum," a Latin text commonly used as typographic filler in designs. Google can translate from Latin to English, so let's see what it comes up with:

We will be sure to post a comment. Add tomato sauce, no tank or a traditional or online. Until outdoor environment, and not just any competition, reduce overall pain. Cisco Security, they set up in the throat develop the market beds of Cura; Employment silently churn-class by our union, very beginner himenaeos. Monday gate information. How long before any meaningful development. Until mandatory functional requirements to developers. But across the country in the spotlight in the notebook. The show was shot. Funny lion always feasible, innovative policies hatred assured. Information that is no corporate Japan.

The fact that Google can translate this at all is interesting because the text isn't even grammatical Latin. This is one of the advantages of statistical translation; since you're just correlating strings of text, it doesn't matter if the inputs are grammatical or even real language, as long as you can relate them to text already in the model. Like the number example, it shows that Google's model doesn't account for structure or meaning when performing translations. We can see that the context totally changes from one line to the next, going from talking about tomato sauce to Cisco in two sentences. This is because the text is used in a wide range of completely unrelated contexts, probably various unfinished websites in this case.

That's not all that's wrong with Google Translate though. I mentioned before that Google Translate doesn't have an abstract intermediate layer that it parses the first language into before translating to the second language, and that it instead has to translate directly between them. So how does Google handle translations between languages without many corresponding documents? It uses an intermediate language, usually English. This is a problem because English, like any human language, has a lot of nuance and ambiguity. It also has a different set of features than many other languages, like having no grammatical gender and no future tense.

English also has only one word for "you." We used to have two, "thou" and "you," but we lost the informal/singular "thou" a few hundred years ago. Other European languages still have both though, like French (tu/vous), Spanish (tú/usted) and German (du/Sie). So what happens if we try to translate "tu" from French to German? You can see we get "Sie," not "du" like we expected. Similarly for Spanish, Google gives us the formal "usted" rather than the informal "tú." I'm not entirely certain that Google is using English as the intermediate language for these translations, but you can see that information is definitely being lost.
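Here's a tiny sketch of how that kind of loss could happen if the translation really is routed through English. The two dictionaries and the `pivot_translate` helper are hypothetical; they only show the mechanism and say nothing about what Google's tables actually contain.

```python
# Hypothetical one-word dictionaries for each leg of the trip.
FR_TO_EN = {"tu": "you", "vous": "you"}  # both collapse to the same English word
EN_TO_DE = {"you": "Sie"}                # English "you" can only map to one choice


def pivot_translate(word, first_leg, second_leg):
    """Translate by going source -> pivot language -> target."""
    return second_leg[first_leg[word]]


print(pivot_translate("tu", FR_TO_EN, EN_TO_DE))
print(pivot_translate("vous", FR_TO_EN, EN_TO_DE))
```

Once "tu" and "vous" have both turned into "you," there's nothing left in the English for the second leg to tell them apart.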

Image courtesy of Brookhaven National Laboratory on Flickr.

So why do we do translations this way if it's so bad? Well, the alternative is really, really hard. Computers are pretty bad at understanding human language (mostly because we don't fully understand it yet), so getting them to accurately understand multiple languages is a real challenge. A historically popular method for this is called rule-based translation, where you basically make an exhaustive list of all of the grammatical rules of a language and all of the words and their meanings and then give it to the computer in a form it can use. Google actually used one of these systems, Systran, until it switched to its own statistical system in 2007. Rule-based systems like Systran parse the input text into an intermediate representation of meaning using a dictionary and the grammatical rules of the input language and then perform the operations in reverse using the rules and dictionary of the target language, much like how a human translator translates a text.
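As a rough illustration of the parse-then-generate idea, here's a toy rule-based translator that knows exactly one English sentence pattern and three words. It has nothing to do with how Systran is actually built; the names `LEXICON`, `parse_english` and `generate_japanese` and the shape of the intermediate dictionary are all my own assumptions.

```python
import re

# A three-word dictionary; a real system would need many thousands of entries.
LEXICON = {"that": "ano", "red": "akai", "umbrella": "kasa"}


def parse_english(sentence):
    """Parse 'How much is <det> <adj> <noun>?' into a small meaning structure."""
    match = re.fullmatch(
        r"how much is (\w+) (\w+) (\w+)\??", sentence.strip().lower()
    )
    if match is None:
        raise ValueError("this sketch only knows one sentence pattern")
    det, adj, noun = match.groups()
    return {"act": "ask_price", "det": det, "adj": adj, "noun": noun}


def generate_japanese(meaning):
    """Build romanized Japanese from the meaning, using Japanese word order."""
    if meaning["act"] != "ask_price":
        raise ValueError("no generation rule for this kind of sentence")
    noun_phrase = " ".join(LEXICON[meaning[key]] for key in ("det", "adj", "noun"))
    # The question part goes at the end in Japanese.
    sentence = f"{noun_phrase} wa ikura desu ka?"
    return sentence[0].upper() + sentence[1:]


meaning = parse_english("How much is that red umbrella?")
print(meaning)
print(generate_japanese(meaning))
```

The word order comes out right because a rule says so, not because of anything learned from data, and any sentence outside that one pattern simply fails. That's the trade-off in miniature.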

The obvious downside to rule-based systems is that because they depend on having a good set of rules to generate good output, a lot of work has to be put into developing rule sets and dictionaries before the system can be useful. This is why most modern translation systems are either exclusively SMT or SMT/rule-based hybrids; the model can just figure out how to handle complex expressions and even new languages based on measured relationships rather than relying on hard-coded rules. As we've seen, the trade-off for this flexibility is that the quality of the translations is often unpredictable.

What's next in machine translation? Well, I think statistical machine translation is really the best use of the resources we have now, but it can't reliably produce natural translations without some understanding of the structure of language. There are already systems that are capable of learning a little bit about structure through statistical reasoning, so this is probably the way forward; rather than relating only words, computer models will learn language in much the same way humans do, by seeing a lot of examples and learning the underlying structure from them. Once we learn more about how humans learn language, I'm sure computers won't be far behind.